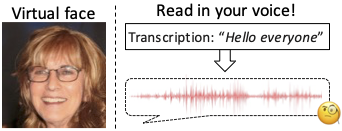

The goal of this work is zero-shot text-to-speech synthesis, with speaking styles and voices learnt from facial characteristics. Inspired by the natural fact that people can imagine the voice of someone when they look at his or her face, we introduce a face-styled diffusion text-to-speech (TTS) model within a unified framework learnt from visible attributes, called Face-TTS. This is the first time that face images are used as a condition to train a TTS model.

We jointly train cross-model biometrics and TTS models to preserve speaker identity between face images and generated speech segments. We also propose a speaker feature binding loss to enforce the similarity of the generated and the ground truth speech segments in speaker embedding space. Since the biometric information is extracted directly from the face image, our method does not require extra fine-tuning steps to generate speech from unseen and unheard speakers. We train and evaluate the model on the LRS3 dataset, an in-the-wild audio-visual corpus containing background noise and diverse speaking styles.

Here, all of the results were using only unseen and unheard speakers.

Note that we does not aim to reconstruct an accurate voice of the person, but rather to generate acoustic characteristics that are correlated with the input face.

Text: The employees raced the elevators to the first floor.

Givens saw Oswald standing at the gate on the fifth floor as the elevator went by.

From voice

From face

Text: These had been attributed to political action;

some thought that the large purchases in foreign grains, effected at losing prices,

From voice

From face

Text: Four point eight to five point six seconds if the second shot missed,

From voice

From face

Text: And so numerous were his opportunities of showing favoritism, that all the prisoners may be said to be in his power.

From voice

From face

Text: The preference given to the Pentonville system destroyed all hopes of a complete reformation of Newgate.

Text: And one or two men were allowed to mend clothes and make shoes. The rules made by the Secretary of State were hung up in conspicuous parts of the prison.

Please be aware that our objective is not to generate an accurate reproduction of the person's voice, but rather to produce acoustic characteristics that are connected to the input face. It is important to note that certain aspects of synthesised speech may not necessarily be linked to face. Furthermore, Face-TTS should not be used for purposes that are illegal or abusive, and we stand in opposition to such usage.

We need face detector to align the face image into LRS3 construction. The code implementation is heavily borrowed from the official implementation of Grad-TTS. We are deeply grateful for all of the projects.

@inproceedings{lee2023imaginary,

author = {Lee, Jiyoung and Chung, Joon Son and Chung, Soo-Whan},

title = {Imaginary Voice: Face-styled Diffusion Model for Text-to-Speech},

booktitle = {IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP)},

year = {2023},

}